Why AI Coding Agents Struggle Without Context and How Context Engineering Improves Accuracy

AI coding agents are rapidly becoming part of everyday software development. From generating boilerplate and refactoring legacy code to assisting with feature development, these tools promise significant productivity gains. Yet many teams encounter a recurring frustration: AI-generated code often looks correct, compiles successfully, and even passes basic tests—only to fail in real-world usage.

The issue is rarely the intelligence of the model. More often, it’s the lack of complete, structured context.

Why AI Coding Agents Struggle Without Context

Apart from what they learned during training, AI coding agents work only with the information available to them at the moment a task is requested. Unlike human developers, they do not retain long-term memory of the project, an implicit understanding of its architecture, or awareness of past design decisions unless those details are explicitly provided.

In modern software teams, context is fragmented across many systems and conversations:

• Product requirements live in ticketing systems

• Architecture decisions exist in outdated or informal documentation

• Business logic is embedded deep within legacy code

• Constraints are discussed verbally or in chat tools

When AI coding agents are asked to build or fix features without this full picture, they compensate by guessing. The result is code that may technically work but does not align with the system’s intent.

Context Fragmentation in Modern Development Teams

Context fragmentation is not a new problem; it has always existed in software development. AI coding agents simply expose it more clearly.

Developers naturally reconstruct context through experience: they remember past incidents, understand why certain shortcuts exist, and know which rules are flexible versus absolute. AI systems, however, cannot infer this history unless it is explicitly provided.

As teams grow and systems become more distributed, context becomes harder to centralize. This fragmentation is one of the primary reasons AI-assisted development feels inconsistent across projects.

Real-World Failure Scenarios

Teams relying on AI coding agents without sufficient context often see the same failure patterns.

A feature implementation compiles cleanly but violates domain rules. A refactor improves readability while breaking downstream dependencies. A bug fix addresses symptoms without correcting the root cause.

These failures are subtle and often surface late in the development cycle, increasing review time, introducing regressions, and reducing trust in AI-assisted workflows.

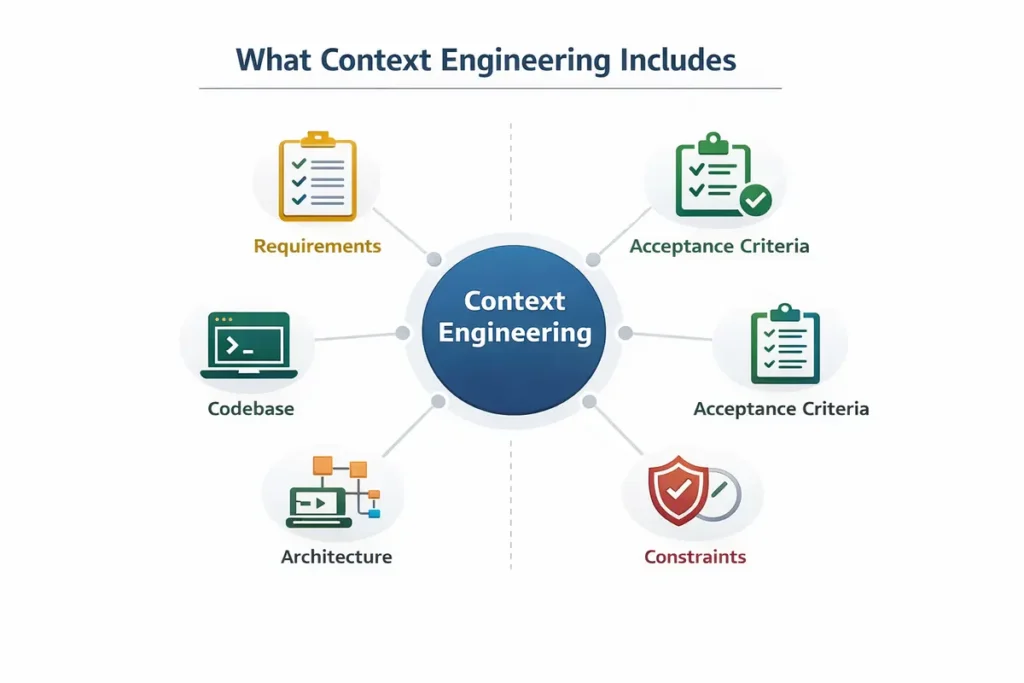

What Is Context Engineering?

Context engineering is the practice of deliberately preparing, validating, and structuring the information an AI system needs before code generation begins. Rather than treating prompts as disposable inputs, context engineering treats context as a first-class engineering artifact.

In practice, context engineering involves assembling functional requirements, identifying relevant sections of the codebase, capturing architectural patterns, and making constraints explicit.
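The four activities listed above can be captured in a small, explicit structure. The following is a minimal Python sketch, not the implementation of any particular tool: `TaskContext` and `missing_sections()` are hypothetical names, and the task, requirement, constraint, and file path are invented for illustration. The idea is that each kind of context gets its own slot, so gaps become visible before code generation begins.

```python
from dataclasses import dataclass, field


@dataclass
class TaskContext:
    """Hypothetical container for the context assembled before a coding task."""

    task: str
    requirements: list[str] = field(default_factory=list)    # functional requirements
    relevant_files: list[str] = field(default_factory=list)  # codebase sections the task touches
    patterns: list[str] = field(default_factory=list)        # architectural conventions to follow
    constraints: list[str] = field(default_factory=list)     # explicit rules that must not be broken

    def missing_sections(self) -> list[str]:
        """Return the names of context sections that are still empty."""
        sections = {
            "requirements": self.requirements,
            "relevant_files": self.relevant_files,
            "patterns": self.patterns,
            "constraints": self.constraints,
        }
        return [name for name, items in sections.items() if not items]


# Illustrative values only; the task, rules, and file path are hypothetical.
ctx = TaskContext(
    task="Add a bulk discount to checkout",
    requirements=["Orders of 10+ items get 5% off"],
    relevant_files=["checkout/pricing.py"],
    constraints=["Discounts must never stack with coupon codes"],
)
print(ctx.missing_sections())  # ['patterns']
```

Here the empty `patterns` section is reported, which prompts someone to document the relevant architectural conventions before the agent starts work.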

Why Prompting Alone Doesn’t Scale

Prompting works well for small, isolated tasks. However, as system complexity increases, prompting alone breaks down.

Large applications span multiple services, databases, integrations, and deployment environments. No single prompt can capture this complexity reliably.

Context engineering addresses this limitation by externalizing system knowledge into structured inputs that AI coding agents can consistently reference.
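One way to make structured inputs consistently referenceable is to render them into the same layout every time. The sketch below assumes context is kept as a plain dictionary of named sections; `render_context` and `SECTION_ORDER` are hypothetical names, and the section names simply mirror the kinds of context described earlier in this article.

```python
# Hypothetical section order; a real team would choose its own.
SECTION_ORDER = ["requirements", "relevant_files", "patterns", "constraints"]


def render_context(task: str, sections: dict[str, list[str]]) -> str:
    """Render structured context as plain text with a fixed, predictable layout.

    Keys outside SECTION_ORDER are ignored in this sketch.
    """
    blocks = [f"# Task\n{task}"]
    for name in SECTION_ORDER:
        items = sections.get(name, [])
        # Always emit the heading so an empty section is visible rather than silently omitted.
        body = "\n".join(f"- {item}" for item in items) if items else "- (none provided)"
        blocks.append(f"# {name}\n{body}")
    return "\n\n".join(blocks)


# Illustrative input: keys are deliberately out of order to show the fixed layout.
prompt = render_context(
    "Add a bulk discount to checkout",
    {
        "constraints": ["Discounts must never stack with coupon codes"],
        "requirements": ["Orders of 10+ items get 5% off"],
    },
)
print(prompt)
```

Because sections always appear in a fixed order and empty sections are labeled rather than dropped, the same inputs always produce identical text, and a reviewer can see at a glance what the agent was and was not told.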

How Context Engineering Improves AI Coding Accuracy

When structured context is provided upfront, AI coding agents have less to guess, and the accuracy of the code they generate improves markedly.

Ambiguity is reduced, fewer assumptions are left to the model, and generated code aligns more closely with system expectations. Teams often see fewer retries, cleaner pull requests, and faster iteration cycles.

Context as an Engineering Artifact

Treating context as an engineering artifact means it is versioned, reviewed, and improved over time. Just like code, context benefits from iteration and shared ownership.
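Versioning context can be as simple as keeping it in a file alongside the code and noticing when it changes. The sketch below assumes, as a hypothetical convention, that context is stored as a JSON-serializable dictionary. It uses Python's standard `json` and `hashlib` modules to compute a stable fingerprint; `context_fingerprint` and `needs_review` are hypothetical helpers that flag when the context has drifted from the version a reviewer last approved.

```python
import hashlib
import json


def context_fingerprint(context: dict) -> str:
    """Return a stable SHA-256 hex digest of a context document."""
    # sort_keys and fixed separators make the serialization independent of key order.
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_review(context: dict, reviewed_fingerprint: str) -> bool:
    """Hypothetical check: True if the context changed since it was last reviewed."""
    return context_fingerprint(context) != reviewed_fingerprint


# Illustrative context documents; the rules themselves are hypothetical.
context_v1 = {
    "requirements": ["Orders of 10+ items get 5% off"],
    "constraints": ["Discounts must never stack with coupon codes"],
}
reviewed = context_fingerprint(context_v1)

# A later edit adds a constraint, producing a new version of the context.
context_v2 = dict(context_v1, constraints=context_v1["constraints"] + ["Prices are stored in cents"])

print(needs_review(context_v1, reviewed))  # False
print(needs_review(context_v2, reviewed))  # True
```

Version control already records the file's history, so a fingerprint like this is mainly useful as a lightweight CI check, or for recording which version of the context an agent used for a given change.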

By formalizing context, teams reduce cognitive load and enable AI systems to operate more reliably.

Why Better Models Alone Don’t Solve the Problem

It is tempting to believe that newer or more powerful AI models will automatically fix accuracy issues. In practice, better models still depend on the quality of their inputs.

Without structured context, even advanced models produce inconsistent results. Context quality—not model capability—becomes the limiting factor.

The Future of AI-Assisted Development

As AI coding agents continue to mature, teams will shift their focus from generation speed to reliability.

Those who invest in context engineering will gain a sustainable advantage by producing predictable, maintainable AI-generated code.

Final Takeaway

AI coding agents are powerful tools, but they are only as effective as the context they receive. Context engineering transforms AI-assisted development from an experimental practice into a reliable workflow.